Haptic technology is a rapidly advancing area of research. It deals with the sense of touch, which actually comprises several distinct types of sensation. Haptics experts describe at least nine haptic dimensions that a human can discern in objects or environments: hardness, texture, temperature, weight, volume, shape, contour, motion, and function. A person perceives these dimensions through many specialized sensors distributed across the body at varying resolutions.

Today’s robots contain a multitude of sensors (though not as many as humans), giving them a richer understanding of their surrounding environment, including the objects in it that they can interact with. Haptic technology can give a robot enhanced functionality, such as calculating the amount of force needed to engage with an object without damaging it. Using haptic sensors, an autonomous robot with the right programming can tell the difference between a fragile egg and a pool ball and adjust its grippers or end effectors to pick up each object with exactly the force needed for a successful interaction. In the last few years, haptic perception in robots has received a great deal of attention.

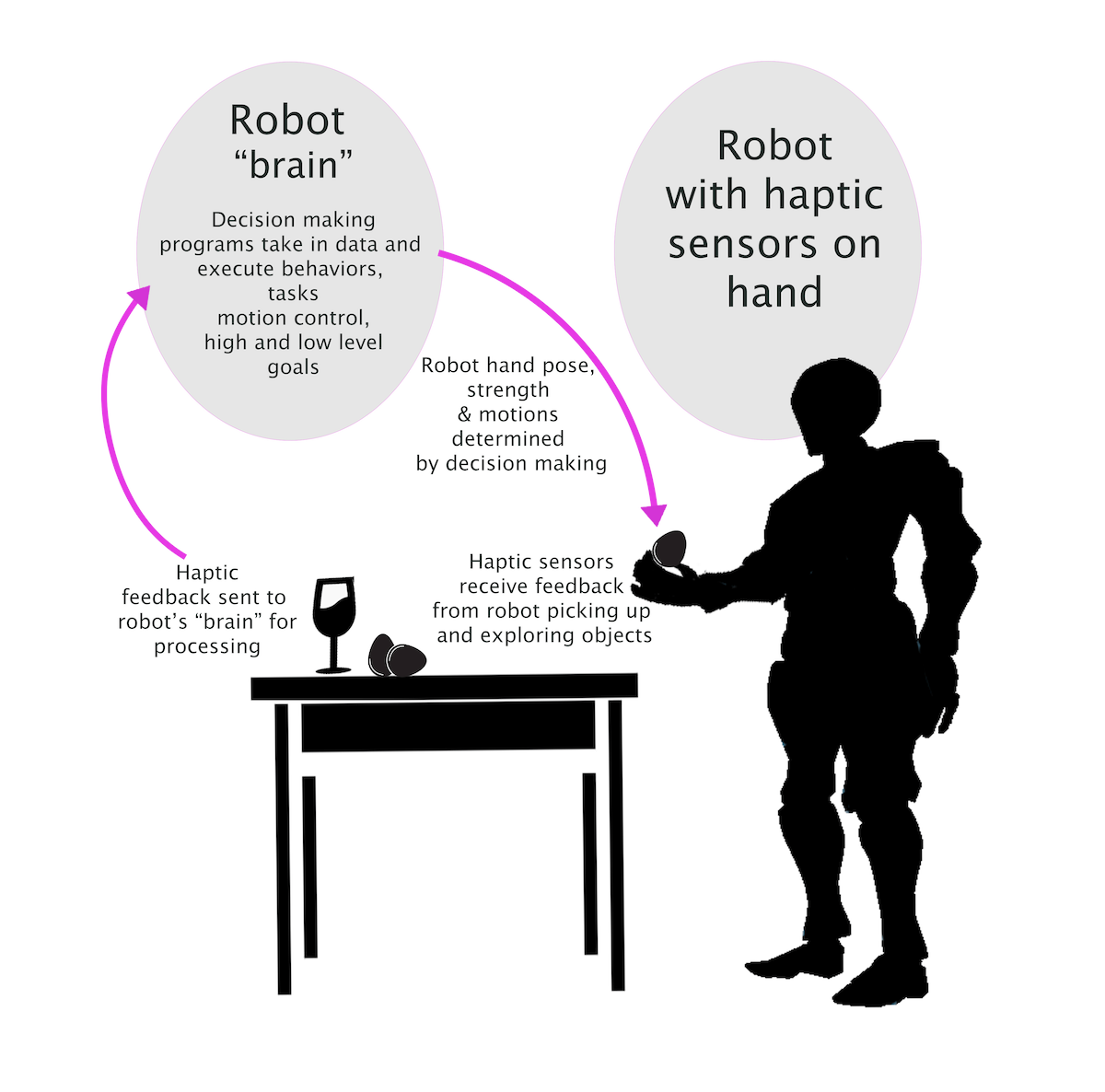

A typical robot includes programming that takes in haptic data (such as shape, texture, weight, temperature, and surface strength), processes it, and then determines its next actions. The loop between sensing and the way the robot ultimately executes actions must be finely coordinated, and with haptic sensors in particular, linking sensing to action requires a detailed closed-loop sensorimotor control system. Modern robots use such loops to determine their ongoing actions, which is what allows them to be autonomous (see Figure 1).

Figure 1: Control loops for a standard robot equipped with haptics.
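The sense, process, and act cycle in Figure 1 can be illustrated with a toy grip controller. This is a minimal sketch under stated assumptions, not the control system of any particular robot: the spring-like object model, the stiffness and target-force values, and the names `SimulatedObject` and `grip` are all hypothetical. Each pass through the loop reads the simulated haptic sensor, compares the sensed force with a target, and moves the gripper accordingly.

```python
from dataclasses import dataclass


@dataclass
class SimulatedObject:
    """Hypothetical toy object: once the gripper closes past the object's
    surface, contact force rises linearly, like a spring."""
    stiffness: float  # N per mm of squeeze (illustrative value)
    gap_mm: float     # gripper travel before first contact (mm)

    def contact_force(self, position_mm: float) -> float:
        # Simulated haptic sensor reading at a given gripper position.
        return self.stiffness * max(0.0, position_mm - self.gap_mm)


def grip(obj: SimulatedObject, target_force: float, step_mm: float = 0.1,
         max_steps: int = 200, tolerance: float = 0.01) -> float:
    """Close the gripper until the sensed force reaches target_force.

    Each iteration is one pass of the sense -> process -> act loop.
    Returns the final sensed force in newtons.
    """
    position = 0.0
    prev_position, prev_force = None, None
    for _ in range(max_steps):
        force = obj.contact_force(position)   # sense
        error = target_force - force          # process
        if abs(error) <= tolerance:
            break
        in_contact = (force > 0.0 and prev_force is not None
                      and prev_force > 0.0 and position != prev_position)
        if in_contact:
            # Estimate local stiffness from the last two in-contact readings
            # and move just far enough to close the remaining force error.
            k_est = (force - prev_force) / (position - prev_position)
            move = error / k_est if k_est > 0.0 else step_mm
        else:
            # No contact yet (or not enough data): close gently.
            move = step_mm
        prev_position, prev_force = position, force
        position += move                      # act
    return obj.contact_force(position)


egg = SimulatedObject(stiffness=2.0, gap_mm=0.35)
pool_ball = SimulatedObject(stiffness=50.0, gap_mm=0.35)
print(round(grip(egg, target_force=0.5), 2))         # → 0.5
print(round(grip(pool_ball, target_force=10.0), 2))  # → 10.0
```

In this sketch the caller chooses the target force for each object; the loop itself only needs its own sensor readings to reach that target, whether the object is compliant or stiff. A real robot would also need limits on speed and maximum force.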

And yet, an even newer scenario has emerged, involving non-autonomous robots that are controlled primarily by a human in the loop. As a baseline, this category includes programmed robots such as Disney’s animatronics, whose actions are consistent and repeatable once the code is downloaded into the robot’s “brain” (a term used very loosely here). More recently, however, this control can and does happen in real time: the human responds continuously to the robot’s environment and situation, making choices that the robot then carries out in its world. In this scenario, the human replaces the autonomous robot’s “brain”.

Human control of robots is not new, but it has taken a back seat to work on autonomous robots in mainstream robotics. Historically, robots like those in Disney’s early animatronics were controlled by programs that executed the robot’s actions along a repeatable, preset path. Many techniques were used to create these programs (typically a series of commands to the servo motors in the robot’s individual joints), but it was complicated to combine all of the recorded motions into smooth, believable movement. Disney engineers found that a human performer could wear a harness that measured complex joint motions, although it often took the performers many tries to achieve the most believable motion. These animatronic characters were not interactive in any sense: the robot simply repeated its programmed actions in a loop.

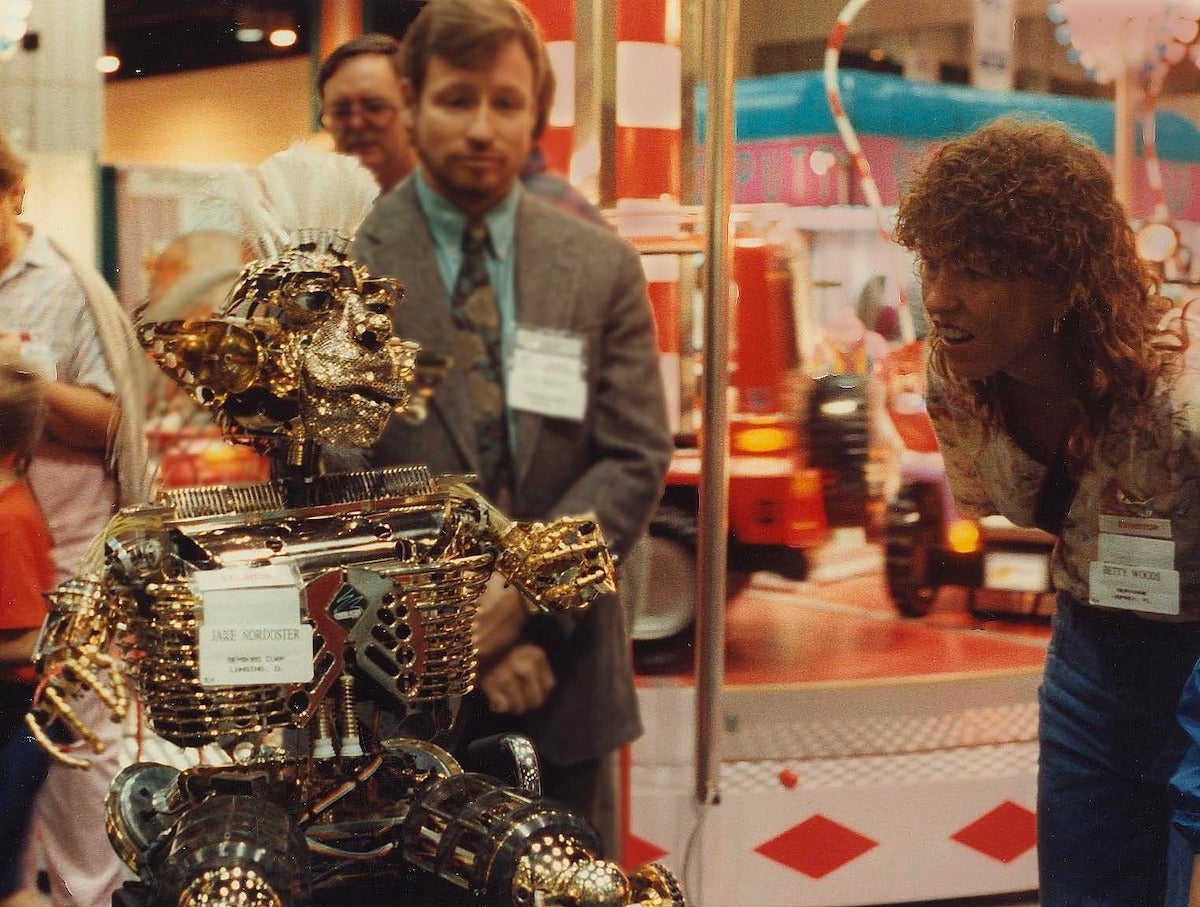

By the 1980s, sensor development had made it much easier for a human to control the motion of a robot in real time, and this technique started to be used more widely, primarily for entertainment. An example of such a character being controlled by a human puppeteer is shown in Figure 2.

Figure 2: A human-controlled robot character at the 1991 IAAPA Expo in Orlando, FL. The human is in another room, taking in visual and audio data from the robot to decide what to do or say next.

A new challenge to advance human control of robots is exemplified by the current ANA Avatar XPRIZE. The goal of this competition is to create a physical Avatar that lets a person operating the robot from a distance feel as if they are truly present at the robot’s remote location. Through the Avatar, an operator would be able to see, hear, and physically interact with people or an environment far away. When the technology is integrated correctly, the person controlling the Avatar feels situated at, or transported to, that distant location, and that presence includes a physical component conveyed through the robot’s body. Achieving this “aha” moment is still rare, but it is the ultimate goal of the teams competing in the ANA Avatar XPRIZE.

Because a robotic Avatar uses the robot’s physical body as a stand-in for the body of the person controlling it, many types of sensory data must be conveyed between the two. Conveying audio and visual information has been well researched and is widely available in applications such as video conferencing, drone technology, and many existing robots. Haptic technologies, however, are less mature, and the sense of touch is much more complex to transmit than audio and visual data, especially because it has so many dimensions. Creating mechanisms that can properly relay even some aspects of touch is challenging, even in autonomous robots. Integrating these devices into a functioning Avatar system, with real-time information relayed back to the operator, is even more difficult.

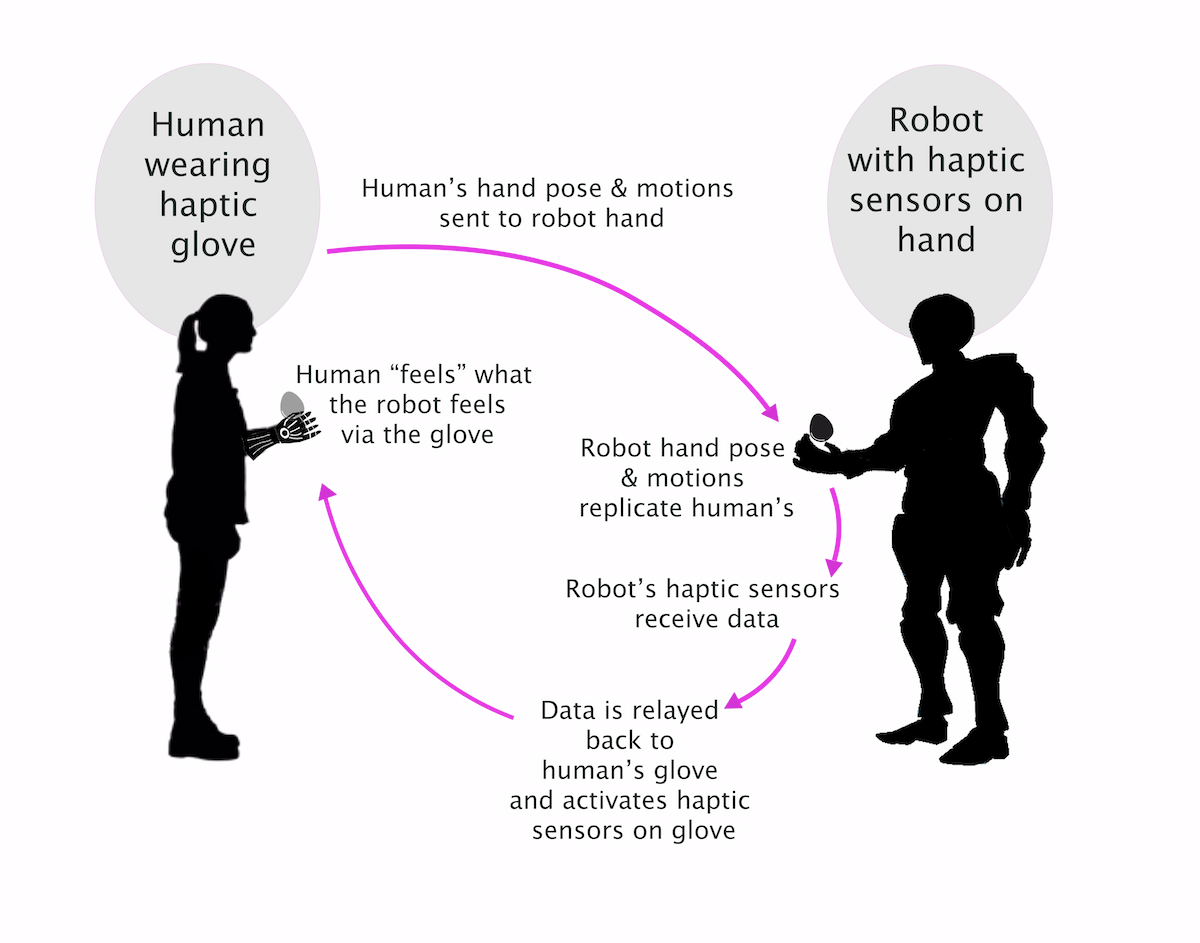

Robotic Avatars operate under a very different set of circumstances than the autonomous control loop described in Figure 1. Rather than the robot’s “brain” interpreting the haptic sensations, there is now a human in the loop. For that human to decide what to do with the haptic information coming from the haptic device(s) integrated into the robot, they too must wear a haptic device. The sensory signals felt by the robot at its location must be replicated in some manner by the haptic device worn by the human. The human then “feels” what the robot at the other end is feeling, whether that is the weight and texture of a ball in the palm of a hand or a stroke along part of the arm.

This situation requires not one but two analogous haptic devices working in synchronization: one that is part of the robot and one worn by the human.

A robot used as an Avatar does not need programmed control mechanisms to interpret the sensations coming from its haptic device(s), because the human does the interpreting. However, conveying haptic cues from the Avatar to the human is an entirely different problem from the control loop shown in Figure 1.

Figure 3: Human in the control loop for an Avatar robot.

A good example is the way a haptic glove worn by a human works in sync with a haptically enabled robot hand. When the robot hand squeezes a soft ball, for instance, the resulting sensations are sent back as signals to the human’s glove, and the human feels as if the soft ball is in their own hand, with their fingers squeezing it.
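The glove and robot hand exchange can be sketched as one cycle of a two-way relay: the operator's finger motion goes out to the robot, and the robot's sensed contact forces come back to the glove. This is a hypothetical sketch, not any team's system. It assumes a five-finger hand, a glove that reports finger flexion normalized to the range 0 to 1, a robot hand that reports fingertip forces in newtons, and a glove that can render at most 5 N; the names `relay_step` and `FINGERS` and the `deadband` parameter are illustrative. A real Avatar would also have to deal with network latency and run this exchange continuously at a high, steady rate.

```python
from typing import Dict, Tuple

# Hypothetical five-finger layout shared by the glove and the robot hand.
FINGERS = ("thumb", "index", "middle", "ring", "little")


def relay_step(glove_flexion: Dict[str, float],
               robot_forces: Dict[str, float],
               glove_max_force: float = 5.0,
               deadband: float = 0.05) -> Tuple[Dict[str, float], Dict[str, float]]:
    """One cycle of a hypothetical bilateral glove <-> robot-hand exchange.

    glove_flexion: operator finger closure in [0, 1], read from the glove.
    robot_forces: fingertip contact forces (N) sensed by the robot hand.
    Returns (robot_finger_commands, glove_feedback_forces).
    """
    # Operator -> robot: mirror each finger's closure, clamped to [0, 1].
    robot_cmd = {f: min(1.0, max(0.0, glove_flexion.get(f, 0.0)))
                 for f in FINGERS}
    # Robot -> operator: render each sensed force on the glove, ignoring
    # readings below the deadband (sensor noise) and capping at the glove's limit.
    feedback = {}
    for f in FINGERS:
        force = robot_forces.get(f, 0.0)
        feedback[f] = 0.0 if force < deadband else min(force, glove_max_force)
    return robot_cmd, feedback


# Squeezing a soft ball: thumb and index close, and their fingertips sense contact.
cmd, fb = relay_step({"thumb": 0.6, "index": 0.7},
                     {"thumb": 1.1, "index": 1.2, "middle": 0.02})
print(fb)
```

Clamping to the glove's range and ignoring tiny readings are simple ways to keep the rendered touch within what the worn device can physically produce; the specific thresholds here are arbitrary.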

This real-time correspondence is the key to the psychological experience of physical presence. Currently, not all haptic sensations can be conveyed this way, because covering every part of a person’s body with haptic devices is too complex, and the same is true for a robot’s body. However, a subset of these sensations can be adequately relayed to the human controlling the Avatar, allowing for a more robust experience.

As a baseline, we expect this kind of two-way haptic capability to be integrated into every team’s Avatar solution for the ANA Avatar XPRIZE Finals testing. Integrating haptic technology alongside the more widely available visual and audio components will help accelerate the usefulness of robotic Avatars for the many use cases they are expected to serve in the future.

Imagine that future, where travel to anywhere is as simple as connecting to a robotic Avatar, allowing anyone to share their expertise, visit loved ones, or enjoy exotic locations as if they were physically present. You won’t even have to pack a suitcase!

The final phase of the ANA Avatar XPRIZE is coming soon. Sign up for the Avatar newsletter to stay up to date on the future of telepresence, and be on the lookout for a competition announcement next month!